The AI market is seeing explosive growth and is expected to hit $280 billion by 2025. Across industries and company sizes, AI adoption is on the rise, with a remarkable 87% of global organizations believing that AI technologies will give them a competitive edge.

It’s clear that AI technology is transforming how we do business, and many companies are looking to explore how to build an AI product themselves. Demand is growing for AI products of both kinds: internal business-oriented applications and those that power customer-facing applications.

In this article, we uncover the biggest challenges you’re up against and provide 12 do’s and don’ts to help you navigate the process of building your own AI product in 2024.

Key Challenges in Building AI Systems

Artificial Intelligence (AI) has undoubtedly transformed various industries, promising innovative solutions and unprecedented capabilities. However, realizing the potential of AI-driven products comes with its fair share of challenges, including:

- Selecting the right application that resonates with users and achieves a coveted product-market fit

- Safeguarding user data and ensuring AI systems are resilient to cyber threats

- Sourcing high-quality data in sufficient volume to provide accurate, trustworthy results

- Making AI models transparent and interpretable, particularly in industries with regulatory requirements

- Managing ethical dilemmas that can be inherent in the integration of AI into user-facing applications

In this rapidly evolving industry, overcoming these challenges is not an easy task. To help you navigate the challenges, we’ve put together 12 do’s and don’ts:

How to Build an AI Product - 6 Dos

Refine your AI product concept

Before diving into the technical intricacies, it's crucial to refine your AI product concept to ensure it addresses a real problem or provides substantial value. This initial step sets the stage for the entire development process and plays a pivotal role in its success.

First, the AI product should be truly valuable to users.

The first thing to consider when refining your AI product concept is the value it brings to users, whether internal business users or external customers. Ask yourself: What problem are you solving for people, and how can your AI product make their lives better? The key is to choose a high-impact use case that provides tangible benefits and positively transforms users' everyday experiences. With that as a guide, you can refine your idea by considering:

- Integration and practicality: Make sure your AI product integrates seamlessly with the tools your users already employ or fits naturally into their existing workflows. Practicality and ease of use are essential for user adoption.

- Efficiency and automation: Consider how your AI product can save time, automate tasks, or reduce effort for users. Efficiency gains are a powerful incentive for embracing new technology.

- Personalization: Aim to provide personalized support by offering relevant information or assistance tailored to individual user needs. Personalization enhances user engagement and satisfaction.

- Anticipation: Explore ways in which your AI product can anticipate user needs. This is where AI can stand out and provide truly new value over traditional software apps.

Second, the AI product needs to provide sufficient accuracy to build users' trust.

Trust is a cornerstone of any successful AI product. It’s not enough to have a great idea. To earn the confidence of your users, you must choose a use case that you can execute with sufficient accuracy. This is a critical consideration that often determines the long-term success of AI applications.

The level of accuracy you can achieve largely depends on the data you have access to. Be thoughtful about how data limitations might impact your product's performance. Achieving high accuracy is typically easier in narrow, well-defined business applications with abundant high-quality, specific data.

Conversely, wide applications may require extensive training data and face greater challenges in maintaining accuracy. In general, it’s best to focus on building artificial narrow intelligence (focusing on a specific niche) rather than a broad, general-purpose system (something like ChatGPT).

Evaluate data availability and quality

Data is gold when you build an AI system. Early in the project, you’ll need to put together a data plan that will define both the scale of data that is needed to achieve the level of accuracy required as well as the source of the data.

First, let’s talk about the scale of data. For most business applications, you won’t be building an AI from scratch; instead, you’ll likely leverage an existing LLM (like GPT-4 or Llama) for its natural language processing capabilities and general intelligence.

So, when we talk about the scale of data, it’s all about what business data you’ll need to augment these LLMs. Generally speaking, the broader the application, the larger the scope of data needed to produce accurate results.

Now, consider where you will source your data. There are many options to consider, including:

- Data your product already has: If AI capabilities are built on top of an existing product, you may have structured or unstructured data collected by your product that you wish to use. All you would need to do in this case is organize it and make it machine-learning friendly (data cleansing).

- Automated data collection: This involves using automated tools and scripts to scrape data from websites and other sources. This can be less expensive than manual data collection, but the quality of the data may not be as high.

- Purchased datasets: Buying datasets from third-party data providers can be a cost-effective way to get large amounts of data quickly, but it may require additional work to clean the data of any irrelevant or incorrect information.
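Whichever source you choose, some cleansing is usually needed before the data is useful. The following is a minimal sketch of that step, assuming records arrive as dictionaries with hypothetical `id` and `text` fields; real pipelines will need rules specific to their own data.

```python
import re

def clean_records(records):
    """Normalize whitespace, drop empty texts, and remove duplicate texts."""
    seen = set()
    cleaned = []
    for record in records:
        # Collapse runs of whitespace and trim the ends.
        text = re.sub(r"\s+", " ", record.get("text") or "").strip()
        if not text:
            continue  # skip records with no usable text
        key = text.lower()
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append({"id": record["id"], "text": text})
    return cleaned

# Hypothetical sample data for illustration only.
raw = [
    {"id": 1, "text": "  Reset   your password\n"},
    {"id": 2, "text": "reset your password"},
    {"id": 3, "text": ""},
    {"id": 4, "text": "Update billing details"},
]
cleaned = clean_records(raw)
```

Here the second record is dropped as a duplicate of the first and the third is dropped as empty, leaving records 1 and 4.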

Carefully weigh AI technology tradeoffs

When building your own AI system, you will encounter various trade-offs that need to be carefully considered:

- Accuracy vs. Speed. Balancing the level of accuracy with the time taken to process AI algorithms.

- Complexity vs. Simplicity. Striking a balance between the complexity of AI models and their ease of understanding and maintenance.

- Explainability vs. Performance. Balancing the interpretability of AI models with their performance and accuracy.

- Your use case requirements. Do you need the model to run within your environment? Do you need a larger context window? Are you using a language other than English?

Selecting the right AI technology for your AI product is a pivotal decision, and it requires a thorough evaluation of various factors. Let’s look at a few examples:

- The public OpenAI API always serves the latest version and is much easier to get started with than deploying and managing Azure OpenAI Service.

- Llama 2 is much faster and cheaper than GPT-4, making it a good choice for tasks that require real-time performance, but it may not be able to process very long inputs or generate very long outputs.

- GPT-4 is a multimodal model, which means that it can generate and process text, images, and other types of data. Llama 2 is a text-only model.

Choose the right AI model optimization strategy

While AI models are trained on a great deal of data, they are not trained on your business data, which may be private or specific to the problem you’re trying to solve. Each generative AI model optimization strategy has its unique strengths:

- Model training: Involves building an AI model from scratch, requiring significant data and computational resources (not to mention technical data science and programming language skills). Developing a model this way is highly customizable and scalable, but gathering data and training from the ground up is time-consuming.

- Fine-tuning: Focuses on adapting an existing model to a specific task, offering a balance between customization and efficiency.

- Retrieval-augmented generation (RAG): Enhances models by integrating external knowledge sources, ideal for tasks needing current or company-specific information.

- Prompt engineering: Relies on crafting effective prompts to guide pre-trained models, requiring skill in prompt design but minimal computational resources. This method is not only cost-effective but also highly effective, yet its potential is frequently underestimated.

The most appropriate AI model optimization strategy depends on factors like data availability, computational resources, task specificity, the need for up-to-date information, and required skills.
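To make the RAG and prompt engineering strategies more concrete, here is a minimal sketch that retrieves the most relevant snippet for a question and places it in a prompt. It assumes a toy keyword-overlap retriever and a hypothetical prompt wording; production systems typically use embedding-based search, and the resulting prompt would be sent to whichever LLM you have chosen.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=2):
    """Rank documents by how many tokens they share with the question."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(question, context_docs):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical company knowledge, for illustration only.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "Support is available by email on weekdays.",
    "Premium plans include priority support and a dedicated manager.",
]
question = "How long do refunds take?"
context_docs = retrieve(question, documents, top_k=1)
prompt = build_prompt(question, context_docs)
```

The instruction to answer only from the supplied context is a small example of prompt engineering: it costs nothing to run and can reduce answers that are not grounded in your data.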

PRO TIP: Consider using a more specialized AI software framework for building search and retrieval applications, such as LlamaIndex, which provides the following tools:

- Data connectors ingest your existing data from their native source and format. These could be APIs, PDFs, SQL, and (much) more.

- Data indexes structure your data in intermediate representations that are easy and performant for LLMs to consume.

- Engines provide natural language access to your data. For example:

  - Query engines are powerful retrieval interfaces for knowledge-augmented output.

  - Chat engines are conversational interfaces for multi-message, “back and forth” interactions with your data.

- Data agents are LLM-powered knowledge workers augmented by tools, from simple helper functions to API integrations and more.

- Application integrations tie LlamaIndex back into the rest of your ecosystem. This could be LangChain, ChatGPT, or any external API integration.

Embrace an iterative development process

Embracing an iterative development process is not just a recommendation but a fundamental necessity when working with AI products, as they often lack a definitive "right" solution from the outset. Unlike traditional software development, where it is often possible to plan and scope the project in advance, AI projects require a dynamic approach that involves exploring multiple iterations and proofs of concept (PoCs) to uncover the best solution.

Even after you have a working concept, you can continue to refine your AI product on multiple fronts:

- AI-enhanced UX: Elevate the user experience by continuously refining the AI's interaction design, responses, and features. Leverage user feedback and behavioral insights to streamline and enrich the user journey.

- Model performance: Commit to improving your AI model's performance iteratively. Regularly assess its accuracy, precision, recall, and other relevant metrics. Fine-tune the model to reduce errors and enhance its predictive capabilities.

- Data refinement: Enhance the quality of your AI by continuously improving the data that fuels it. Early data generated from user interactions should be harnessed to refine the model. Use this feedback loop to iteratively train the model with more accurate, diverse, and relevant data.

- Feature expansion: Gradually expand the scope of features and functionalities offered by your AI product. You might begin with it solving a single particular task, but as your understanding of user needs grows, incrementally introduce new features to meet evolving requirements.
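The model performance metrics mentioned above can be tracked with very little code. This is a minimal sketch for a binary classification task, assuming labels are encoded as 0 and 1; the sample labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Hypothetical ground truth and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Recomputing these numbers after every model, prompt, or data change gives each iteration a measurable baseline to beat.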

PRO TIP: Building AI products requires ongoing maintenance, data updates, and fine-tuning to adapt to changing environments. Companies that treat AI as a one-time project instead of an ongoing initiative often find that their systems become obsolete or ineffective.

Plan to adopt a continuous improvement mindset when it comes to AI. Regularly monitor, update, and fine-tune your AI systems to keep them relevant and accurate as situations and data change.

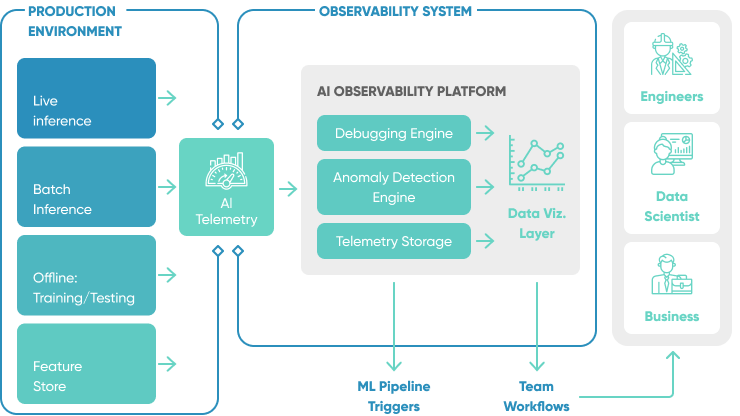

WhyLabs is an observability platform designed to monitor data pipelines and ML applications for data quality regressions, data drift, and model performance degradation. It is built on top of an open-source package called whylogs.
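To illustrate what a data drift check does in principle, here is a minimal sketch using only the Python standard library. It does not use the whylogs API; the function names, the threshold of 2.0, and the sample values (imagined as input lengths in tokens) are all assumptions made for illustration.

```python
from statistics import mean, stdev

def mean_shift(baseline, recent):
    """Shift of the recent mean, in units of the baseline standard deviation."""
    spread = stdev(baseline)
    if spread == 0:
        return 0.0 if mean(recent) == mean(baseline) else float("inf")
    return abs(mean(recent) - mean(baseline)) / spread

def has_drifted(baseline, recent, threshold=2.0):
    """Flag drift when the recent mean moves more than `threshold` deviations."""
    return mean_shift(baseline, recent) > threshold

# Hypothetical feature values collected at launch and in two later windows.
baseline_lengths = [40, 42, 38, 41, 39, 40, 43, 37]
stable_window = [41, 39, 40, 42]
shifted_window = [80, 85, 78, 90]

stable_flag = has_drifted(baseline_lengths, stable_window)
shifted_flag = has_drifted(baseline_lengths, shifted_window)
```

A check like this only catches a shift in the average of one feature; dedicated observability tools compare full distributions across many features.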

Design with usability in mind from the start

A successful AI product hinges on delivering a user experience that is both intuitive and engaging. To achieve this, it's essential to consider human-computer interaction fundamentals and research to understand what truly matters in user-centric design. This involves understanding how users interact with technology, their expectations, and cognitive processes.

Consider the following questions as you begin to develop or enhance your AI solution:

- What type of user interaction will best suit your AI product - chat interface, speech recognition, or a robust user interface with buttons and menus?

- How much control do you want to give users over their interactions with AI?

- Should users have the ability to customize settings and preferences?

- Will there be human staff or users acting as "checks" on the AI's outputs and decisions?

- When and how frequently should the AI prompt users for input or guidance?

- How can you design prompts and questions that are contextually relevant and feel natural within the user's workflow or conversation?

- What features can you provide to enable users to manage their interactions, review past interactions, or adjust their preferences?

- How will the AI tool handle errors or misunderstandings in user input, and how can the user interface facilitate smooth error recovery?

PRO TIP: Incorporate user-friendly mechanisms that invite users to provide feedback on the AI's behavior. Whether through ratings, comments, or direct interactions, these channels offer valuable insights. Combine user input with the analysis of real user behavior to guide iterative enhancements to the user experience.

How to Build an AI Product - 6 Don’ts

It's crucial to recognize potential pitfalls that could undermine the AI development process. Steering clear of these 6 “don’ts” is vital to ensure your team delivers a user-centric, business-oriented AI product:

Ignore the need for human oversight

Human involvement is essential in overseeing AI systems, checking their work, and facilitating the ongoing training process. By thoughtfully integrating humans into the AI workflow, we can enhance trust in the system and ensure responsible AI use. Here's how to strike that balance effectively:

- Be clear about what the AI can and can’t do: One of the cornerstones of responsible AI is transparency. Clear communication is essential for setting realistic expectations, guiding users on interacting with the AI, avoiding misunderstandings, and preventing misuse.

- Prioritize user onboarding and feedback: Include information about the AI's abilities and limits during user onboarding using interactive tutorials or guided tours. If the AI can't perform a requested action, provide clear feedback and, if possible, suggest an alternative.

- Identify key points in the AI workflow where human intervention and oversight are essential. Humans can act as checks to review and verify AI-generated outputs, especially in critical or high-stakes scenarios. This not only helps ensure accuracy but also reinforces user trust in the system.
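One common way to place humans at key points in the workflow is to route uncertain or high-stakes outputs to a reviewer. The sketch below assumes the AI system exposes a confidence score between 0 and 1 and that the `high_stakes` flag is set by your own business rules; both, along with the 0.8 threshold, are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AIOutput:
    text: str
    confidence: float          # assumed to be in the range [0, 1]
    high_stakes: bool = False  # set by application-specific rules

def route(output, min_confidence=0.8):
    """Send risky or low-confidence outputs to a human, approve the rest."""
    if output.high_stakes or output.confidence < min_confidence:
        return "human_review"
    return "auto_approve"

# Hypothetical outputs for illustration.
routine = route(AIOutput("Your order has shipped.", confidence=0.95))
unsure = route(AIOutput("The contract ends in 2027.", confidence=0.55))
critical = route(AIOutput("Approve the loan.", confidence=0.99, high_stakes=True))
```

The threshold is a product decision: lowering it reduces reviewer workload, while raising it sends more outputs through a human check.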

Neglect talent acquisition and development

AI is a complex field that requires specialized skills. Many companies that are creating AI strategies fail to invest in acquiring and developing the right talent for their initiatives. Not having the right skills for AI is often the cause of project failures.

In many cases, companies need data scientists, machine learning engineers, and software developers familiar with AI technologies and a diverse range of programming languages. Businesses should put plans in place to recruit new employees with these skill sets or upskill their existing employees to fill these critical roles.

PRO TIP: Work with the right partners who have expertise in AI development. Whether you work with a consultant or a full-service development team, this can help take some of the guesswork out of AI development and allow you to see tangible results faster.

Fail to adequately test and validate AI systems

Many challenges hinder the creation of high-quality, production-grade AI software, including:

- Non-deterministic outputs: AI models are probabilistic and can produce different outputs for the same prompt (even with a 0 temperature setting as model weights are not guaranteed to be static over time).

- API opacity: Models behind APIs change over time

- Security: LLMs are vulnerable to prompt injections

- Bias: LLMs encode biases that can create negative experiences

- Cost: State-of-the-art models can be expensive

- Latency: Most experiences need to be fast

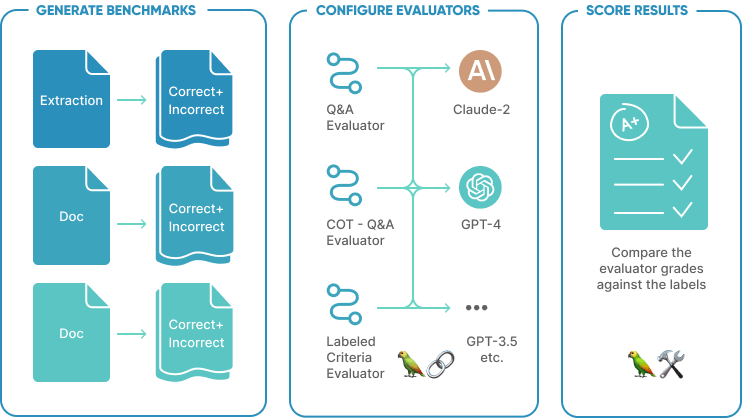

Testing and evaluation help to expose issues, so you can decide how best to address them, be that through different design choices, better models or prompts, additional code checks, or other means.
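Because outputs are non-deterministic, it can help to run each test case several times and measure both correctness and consistency. This is a minimal sketch of such a harness; `make_fake_model` is a hypothetical stand-in that alternates answers to mimic a real model, and the substring check is a deliberately simple correctness rule.

```python
from collections import Counter

def evaluate(model, cases, runs=3):
    """Run each (prompt, expected) case several times and summarize results."""
    results = []
    for prompt, expected in cases:
        outputs = [model(prompt) for _ in range(runs)]
        correct = sum(expected.lower() in out.lower() for out in outputs)
        most_common_count = Counter(outputs).most_common(1)[0][1]
        results.append({
            "prompt": prompt,
            "pass_rate": correct / runs,               # share of correct outputs
            "consistency": most_common_count / runs,   # share of the modal output
        })
    return results

def make_fake_model():
    """Build a stub model that varies one answer to mimic non-determinism."""
    calls = {"n": 0}
    def fake_model(prompt):
        calls["n"] += 1
        if "capital of France" in prompt:
            return "Paris"
        return "4" if calls["n"] % 2 else "four"
    return fake_model

cases = [
    ("What is the capital of France?", "Paris"),
    ("What is 2 + 2?", "4"),
]
report = evaluate(make_fake_model(), cases, runs=4)
```

In this run the first case passes every time, while the second passes only half the time because the stub alternates between "4" and "four", which is the kind of issue a single-run test would miss.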

PRO TIP: LangChain, our recommended framework for building AI systems, has many configurable evaluators built by the community. Those can, for example, help to measure the correctness of a response to a user query or question.

Overestimate AI reasoning abilities

Humans possess an innate ability to employ common sense reasoning, drawing upon shared knowledge and logical thinking to navigate the complexities of daily life. LLMs, while capable of some degree of reasoning, still fall well short of human cognitive abilities. It’s important not to overestimate AI reasoning abilities in these key areas:

- Deception potential: AI systems are devoid of moral or ethical agency. They lack values, intentions, and the ability to distinguish right from wrong. Consequently, they may offer responses that, while logically sound, can be misleading or even deceptive.

- Hallucinations: LLMs operate based on patterns and data from their training sets. “Hallucinations” occur when AI systems strive to provide answers despite lacking the requisite information or logical reasoning.

- Absence of contextual understanding: AI technology has not advanced to the point that it can replicate humans’ ability to draw on context and common sense. Consequently, LLMs may furnish seemingly rational responses that, upon closer scrutiny, lack the depth of contextual comprehension that human reasoning brings to the table.

It's important to note that not all LLMs are created equal; every model will possess distinct strengths and weaknesses in reasoning. Researchers have made strides in quantifying LLMs’ reasoning abilities, and this data can help you select an appropriate technology for your application.

PRO TIP: As large language models continue to advance, evaluation remains crucial for tracking progress and mitigating risks. Two main approaches show promising results for evaluating LLMs:

- Human evaluation - the most natural measure, but does not scale well

- Using LLMs themselves as judges (LLM-as-a-judge) to evaluate other LLMs
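The LLM-as-a-judge approach can be sketched as a grading prompt plus a parser for the judge's reply. In the sketch below, the prompt wording and the 1 to 5 scale are assumptions, and `stub_judge` is a hypothetical placeholder for a real LLM call so that the example runs on its own.

```python
import re

JUDGE_TEMPLATE = (
    "You are grading an answer.\n"
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "Candidate answer: {candidate}\n"
    "Reply with 'Score: N' where N is an integer from 1 (wrong) to 5 (fully correct)."
)

def parse_score(reply):
    """Extract the 1-5 score from the judge's reply, or None if absent."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None

def judge_answer(judge, question, reference, candidate):
    """Ask the judge (any callable taking a prompt) to grade a candidate answer."""
    prompt = JUDGE_TEMPLATE.format(
        question=question, reference=reference, candidate=candidate
    )
    return parse_score(judge(prompt))

def stub_judge(prompt):
    """Stand-in for a real LLM: full marks if the reference appears in the answer."""
    reference = prompt.split("Reference answer: ")[1].split("\n")[0]
    candidate = prompt.split("Candidate answer: ")[1].split("\n")[0]
    return "Score: 5" if reference.lower() in candidate.lower() else "Score: 1"

good = judge_answer(stub_judge, "Capital of France?", "Paris", "It is Paris.")
bad = judge_answer(stub_judge, "Capital of France?", "Paris", "It is Lyon.")
```

Returning `None` for unparseable replies matters in practice, since a judge model may not always follow the requested output format; spot-checking judge scores against human evaluation is a sensible safeguard.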

Overlook AI system scalability

Companies often pilot AI systems on a small scale without considering how those efforts will scale. Starting small is a good approach, but we recommend considering scalability from the beginning of every project so you can avoid bottlenecks and inefficiencies down the line.

For most organizations, the complexity of hosting LLMs in their own environments, the availability of engineers, power requirements, and the price and availability of hardware are large impediments; almost all organizations will need to use a service from one of the large cloud providers. The main options are:

- Use a shared service where you pay based on the size of the input and output (typically measured in tokens), or

- Deploy a model onto dedicated hardware. The choice of hardware, and hence cost, is driven by the size of the LLM model.

This choice is similar to other cloud hosting decisions, where we choose between platform as a service (PaaS), infrastructure as a service (IaaS), and software as a service (SaaS). Dedicated hardware is typically only economical when you have high workloads for a large part of the day.
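A rough break-even calculation can show when dedicated hardware starts to pay off. The sketch below is only illustrative: the token price, request size, and monthly hardware cost are hypothetical figures, not quotes from any provider, and it ignores engineering and operations costs.

```python
def shared_monthly_cost(requests_per_day, tokens_per_request,
                        price_per_1k_tokens, days=30):
    """Monthly cost of a pay-per-token shared service."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return requests_per_day * cost_per_request * days

def break_even_requests_per_day(dedicated_monthly_cost, tokens_per_request,
                                price_per_1k_tokens, days=30):
    """Daily request volume at which both options cost the same."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return dedicated_monthly_cost / (cost_per_request * days)

# Hypothetical figures for illustration only.
TOKENS_PER_REQUEST = 2000     # input plus output tokens
PRICE_PER_1K_TOKENS = 0.01    # currency units per 1,000 tokens
DEDICATED_MONTHLY = 6000.0    # monthly cost of dedicated hardware

shared_cost = shared_monthly_cost(5000, TOKENS_PER_REQUEST, PRICE_PER_1K_TOKENS)
break_even = break_even_requests_per_day(
    DEDICATED_MONTHLY, TOKENS_PER_REQUEST, PRICE_PER_1K_TOKENS
)
```

With these assumed numbers, 5,000 requests per day costs about 3,000 per month on the shared service, and dedicated hardware only becomes cheaper above roughly 10,000 requests per day.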

PRO TIP: Dedicated hardware is worth considering if you are developing a customer-facing application that will be heavily used 24x7 and you already have a mature DevOps and support infrastructure.

Our DevOps automation consulting services can assist you in developing a robust infrastructure to support your AI systems at scale. Contact us to learn more about how we can support your organization's growth and success.

Fail to respond to rapid technology changes

Best practices in AI product development today are different from those just 6 months ago - and certainly those 2 years ago. This space is evolving rapidly. Utilizing the latest industry AI design patterns and technologies is crucial when developing AI systems.

Here's how you can navigate this ever-changing landscape:

- Stay informed: Keep up to date with the latest trends, research, and advancements in AI design by following industry publications, attending conferences, and engaging with AI communities. This ensures that your system benefits from the collective knowledge and experience of the AI community, adheres to best practices, and remains competitive in an ever-evolving technological landscape.

- Adapt and innovate: As you learn about new AI design patterns, evaluate their applicability to your system. Be open to adopting and adapting these patterns to improve your system's performance, usability, and user experience.

- Train your team: Encourage team members to participate in training programs and workshops focused on AI design patterns. This builds a shared understanding of the latest design methodologies and ensures that the team stays current with industry standards.

PRO TIP: Some of the best newsletters that cover AI:

- The Rundown AI - quick, concise, and general overviews—rundowns—of the latest AI news daily

- The Neuron - From Pete Huang - A daily expert curation on everything going on in AI. It’s short, it’s pithy and fun

- TLDR AI - AI, ML, and Data Science in 5 Min Daily - includes research, innovation and engineering resources

Conclusion

Building a successful AI product in 2024 demands a proactive mindset, strategic planning, and a keen focus on user-centric design. By following the do's and don'ts outlined in this article, you can navigate the ever-evolving landscape of AI technology and deliver valuable, business-oriented AI solutions.

Looking for an AI software development company to help you minimize AI product development risks? At SoftKraft we work closely with our clients to understand their unique challenges and build reliable AI products faster. Reach out for a free quote!